From simple web crawlers of the early 90s to groundbreaking innovations in AI-driven algorithms, search engine optimization (SEO) has experienced a rapid evolution over the past three decades. Throughout this journey, SEO has become increasingly complex and ever more fundamental to modern digital marketing strategies.

Whether you’re new to content creation or an experienced content manager looking for ways to maximize your online reach, understanding the history of search engines and SEO is key when building compelling content that audiences will engage with.

It’s fascinating to look back at the evolution of SEO and explore where we are now as well as what other changes might be on the horizon. So let’s dive deep into the history of search engines and how SEO has evolved over time!

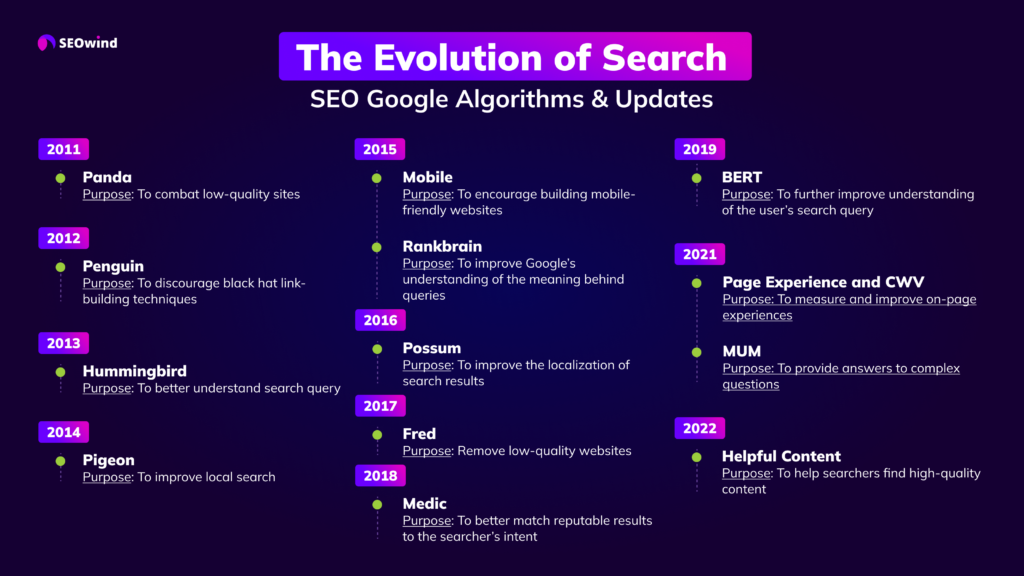

SEO Timeline

For those who like to get straight to the point, we’ve prepared an infographic showing how search has evolved, presenting Google’s SEO algorithms and updates from 2011 to the present.

If you’d like to learn more, keep reading for all the details.

The History Of Search Engines

First things first. Before we go into SEO evolution, let’s start with the history of search engines.

It may seem a little early to write about the history of search engines. After all, these digital tools have only been around for a little over three decades.

But before history can be written, the question must be asked, what is a search engine?

To put it simply, a search engine accepts a user query and then searches its index of the internet for articles, videos, forums, images, and other data related to that input. Search engines use algorithms to find the relevant pages and then organize and display them on what is called a results page. Once you get the results, you scroll through the listed websites to find the best information on your topic.

In other words, a search engine is your information helper who does the hard work for you.

Bear in mind that the early search engines were not like the search engines of today. They were very primitive and did not have as many features as the present-day models.

Plus, they did not have many websites or web pages to search. Usually, the information these early search engines found was on File Transfer Protocol (FTP) sites.

These sites were maintained manually and did not contain much information. They also consisted of shared files rather than the kinds of pages you see on today’s search engine results pages.

But like anything else, once the product was invented, other brilliant people joined in and refined it. That is what happened to the search engines, as you will soon see.

When Was The First Search Engine Created?

Simple question, no simple answer.

This is a matter of debate.

- Most websites documenting the history of the search engine state that Archie was the first one. Technically, they are correct, as it was a search engine. However, Archie was designed to search FTP sites, not the world wide web. It was built at McGill University and is usually dated to 1990, although some sources give earlier dates for its development.

- After Archie came W3Catalog, which was created by Oscar Nierstrasz at the University of Geneva in September 1993. Two months later, in November 1993, Aliweb (Archie Like Indexing for the Web) appeared. Many people consider it the first world wide web search engine.

- Some people may list Veronica, created in 1992, ahead of W3Catalog. But Veronica, like its 1993 companion Jughead, only searched file names and titles stored in Gopher index systems, so it may not qualify as a real search engine.

- 1993 was a busy year for search engines in their most primitive form. World Wide Wanderer, JumpStation, and the RBSE spider were all developed around the same time. One drawback of these early systems was that they returned no results unless your query matched exact titles.

- Then 1994 was another hectic year as Infoseek, EINet Galaxy, and Yahoo were brought into existence.

- Not to be outdone, 1995 saw the creation of LookSmart, Excite, and AltaVista. AOL bought WebCrawler that year, and Netscape turned to Infoseek as its default search engine.

- After that, in 1996, Inktomi’s HotBot and Ask Jeeves (later Ask.com) joined the growing list of search engines.

- 1996 is famous for another creation as well. BackRub was invented, and it may have been the first search engine to use backlinks. What makes this little search engine notable is that it is the foundation from which Google sprang.

Other claims to fame for BackRub included being the first to rank websites based on authority, using citation analysis of the links between pages. These 90s search engines helped build the current crop of search engines everyone uses today.

Who Invented The Search Engine?

When it comes to history, there is always a lot of debate over the actual date of invention. Sometimes this debate flows over to who created what.

- Many websites list only the university where the invention took place, while others name the student or professor mainly responsible. For example, one website says Archie was created at McGill University, while another names the student who created it: Alan Emtage.

- More people remember the inventor of the world wide web, Sir Tim Berners-Lee, who worked at CERN in Switzerland, than remember the creator of the World Wide Wanderer, Matthew Gray.

- Or they may remember Bob Kahn and Vint Cerf, who in 1974 published the design for TCP, the foundation of the TCP/IP protocols the internet can’t do without today. Cerf in particular is well known because he now works for Google, yet few would remember who created W3Catalog: Oscar Nierstrasz.

In essence, it is more accurate to say that the search engine of the 90s was invented by a group of individuals who improved on existing designs and technology.

All the men listed above had a hand in developing the modern search engines that rank websites and help you find the information you need quickly.

When Did SEO Begin? SEO background

There is the legend, and there is the reality. Most people go for the legend, as it is more interesting and provides a link to rock music.

So let’s go with that.

The legend has it that the manager of the famous rock group Jefferson Starship was upset at the low ranking his band received on different search engines.

As the story goes, he was the one behind the development of search engine optimization, and he got started before the officially recognized beginning of SEO practices.

SEO’s official start is usually dated to 1997, though it probably began in the early 90s, when the first search engines appeared on the world wide web. Still, the term itself was not used until February 1997, by John Audette of Multimedia Marketing Group.

There may be other stories about how SEO got started. Many people give the credit to BackRub, as it started using authority and citation analysis to rank websites on its results page.

To be honest, SEO started before both 1997 and BackRub, because world wide web users always had to use keywords to find information on any given topic. Keywords have always been a top SEO strategy, and they predate ranking by citations and authority by several years.

How Has SEO Evolved?

While Google has been the dominant player in SEO development, its contribution and dominance did not come about until long after SEO had evolved to a more advanced level.

Website owners have always used strategies to gain visibility; what changed were the strategies themselves, as new needs kept emerging.

In the beginning, it was enough to use just keywords to get people to your website. There were not that many websites, and no one needed any real content to drive traffic to their door.

As competition grew, so did the different strategies. Keywords were no longer enough, and website owners branched out into using e-mail marketing, link building, and even paid ads on what few social media outlets existed at the time.

As time went on, another need arose: now that you had the traffic, how did you keep visitors on-site long enough to make a purchase, fill out a form, and so on? This need led to the development of great content.

But great content was not enough, so user experience became a high priority. This led to the use of video, images, and easy navigation to keep visitors on-site until they converted into customers.

These strategies are still used today, but they have been refined in response to Google’s dominance and its algorithms and other ranking criteria, which place high value on website content and usability.

The Beginning of SEO: ‘The Wild West’ of the ‘90s

The term Wild West may be misleading in regard to 90s internet use. At the same time, it may be an apt analogy, given the limited reach of governments and tech companies at that time.

The old wild west of American history had laws, but it was difficult to enforce them. Also, sheriffs and judges invented their own laws and punishments for those they considered lawbreakers.

This situation made it easy for criminals and gunslingers to move from county to county: when they were wanted in one county, they could go to another where they were not and live as they pleased.

This made life in those wild west days very dangerous at times. The same can be said of the internet of the 90s: where laws dealing with specific internet activities existed at all, enforcement was far from universal.

Internet users had a lot of freedom in those days to use certain keywords as domain names; for example, www.whitehouse.com was a porn site. Because the internet was in its infancy in the 90s, not much was known about it, and few people thought much harm could be done on the world wide web.

As governments got wise to the dangers that came with a free-wheeling internet, laws began to change, and stricter controls were placed over the users and search engines.

Probably the biggest difference between today’s internet and the 90s is not only the regulations governing the internet but that the speed was a lot slower, and there were fewer junk sites.

As technology grew and changed, so did the internet, which eventually attracted the attention of different governments who implemented more laws to reel in those free-wheeling website owners and users.

Black Hat Tactics Get a Bad Reputation: Early 2000s – 2005

When the first search engines were created, there were not many rules to follow. Search engines were in their infancy, and few people had a clear idea of which SEO practices were good and which were bad.

But as the years passed and search engines developed, rules became the norm. These rules eventually divided SEO into white hat and black hat practices.

White hat SEO was considered ethical, while black hat SEO practices were unethical because they violated search engine rules. People used black hat techniques anyway because of the quick ranking gains they brought.

Make no mistake: these are bad strategies, and using them on your website can get you into a lot of trouble, because black hat strategies violate search engines’ rules and terms of service.

If you are caught using these strategies, your website and your business will suffer. Three common black hat tricks are:

- keyword stuffing,

- white letters on a white background, or

- buying links.

Using these unethical techniques can get your website de-indexed, cost it its rankings and web traffic, and even force you to shut down your business.

Whatever short-term gain you get from these strategies will be wiped out once Google or another search engine discovers them. They earned their bad reputation because search engines consider them against the rules and because they create an unfair playing field for smaller websites.

To maintain your website’s reputation, rankings, and traffic, you need to consistently practice what search engines consider white hat SEO.

The Google Revolution

Larry Page and Sergey Brin were graduate students at Stanford University when they created their search engine. It was just another search engine, but with a twist: they added a couple of ranking criteria to help sort the many websites that had come online in the previous 5 to 6 years.

The company was not even called Google at the time. The search engine was called BackRub, and its founders had very ambitious goals for it.

These two did not invent a new product; they merely built on what had already existed and went about improving on those existing designs and operations. But two things took place that paved the way for Google, or BackRub, to become a giant in this industry.

PageRank Formula

First, earlier search engines were suffering from the misuse and abuse of keywords. Content quality suffered too, as keyword stuffing and hidden text became the norm in the 90s.

Google co-founder Larry Page developed the PageRank formula, which helped clean up this mess by making authority a ranking criterion: a page linked to by many important pages was treated as more authoritative than one with few or weak inbound links. As a result, BackRub was able to cut through much of the spam that was degrading search results at the time.
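To make the idea concrete, here is a minimal Python sketch of PageRank as it is commonly described. Every page starts with an equal share of score; on each round, a page passes a damped share of its score along each of its outgoing links, and the rest is spread evenly over all N pages: PR(p) = (1 - d)/N + d × Σ PR(q)/L(q), summed over the pages q that link to p, where L(q) is the number of links on q. The damping factor d = 0.85 is the value suggested in Page and Brin’s original paper. The function name, the toy link graph, and the choice to spread the score of pages with no outgoing links evenly are illustrative assumptions, not Google’s production algorithm, which has changed enormously since 1996.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             max_iter: int = 100, tol: float = 1e-10) -> dict[str, float]:
    """Compute PageRank scores by power iteration (illustrative sketch).

    `links` maps each page to the pages it links to. Pages with no
    outgoing links ("dangling" pages) have their score spread evenly
    over all pages, which is one common convention among several.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start with equal scores

    for _ in range(max_iter):
        # Score held by dangling pages is redistributed uniformly.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        base = (1.0 - damping) / n + damping * dangling / n
        new_rank = {page: base for page in pages}

        # Each page passes a damped share of its score along each outgoing link.
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share

        delta = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if delta < tol:  # stop once the scores have settled
            break
    return rank


# Hypothetical four-page site: every page links back to "home",
# while nothing links to "spam".
web = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "spam": ["home"],
}
for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
    print(f"{page:<6} {score:.4f}")
```

In this toy graph, “home” collects the most score because every other page links to it, while the hypothetical “spam” page, which receives no links at all, is left with only the baseline share of (1 - 0.85) / 4 = 0.0375. That is the intuition behind why authority-based ranking made pages with no reputable inbound links less effective.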

Google Web Crawler

The second thing that boosted Google to prominence was its partnership with Yahoo, which began using Google to power its search results in 2000. That exposure helped Google become the dominant player in the internet game.

Google’s web crawler and PageRank algorithm were revolutionary and helped make Google the powerhouse it is today. Because Google treated links as very important, everyone jumped on board and started building links for SEO.

This created a problem that the company had to address in later years. Meanwhile, Google kept innovating: it developed the Google Toolbar, which displayed a page’s PageRank score.

The toolbar’s reach was boosted by its availability for Microsoft’s Internet Explorer. This does not mean that Google made no mistakes. The original AdSense became a problem, and, as with link building, the company had to make changes to solve a problem it had initially caused.

The Google revolution fundamentally altered the search engine landscape and changed the way websites were ranked.

How Have Google Algorithms Changed

Google has built a very complex system of algorithms to rank websites. These algorithms retrieve data from its index and return results almost instantly.

To do this, the search engine had to combine ranking factors with its algorithms so that the most relevant websites landed in the top spots on the results page.

When Larry Page created PageRank in 1996, it was a revolutionary change in ranking systems. Yet it was another 7 years before Google rolled out a major, widely noticed algorithm change.

2003 Google Florida

That change was Florida. Google designed this update to filter out websites using manipulative tactics such as low-quality links. Unfortunately for many websites and small businesses, Google released it in November, just before the Christmas shopping season, and it also caught many legitimate sites. This hurt many businesses at that time.

2004 Google Trust Rank

The next year brought TrustRank, a link-analysis technique widely associated with Google for filtering out spam and sites using black hat techniques. It rates how trustworthy a website is, so that search engine users get access to top-quality sites.

These few examples give some insight into how Google has sought to make internet searches better for its users. The work is ongoing: in September 2022, Google released yet another core algorithm update.

The problem with keeping up is that Google now makes thousands of changes every year, unlike the early days, when it introduced only a few.

2010 Google Caffeine

Google Caffeine, rolled out in 2010, was a major overhaul of Google’s indexing system. It was designed to improve the speed, accuracy, and relevancy of search results.

The main feature of the update was a faster indexing rate, which allowed Google to index web pages much more quickly and keep its results fresher than before.

2011 Google Panda

The very next year, in 2011, Google rolled out another major algorithm update, known as “Google Panda.”

This update was designed to reduce the visibility of low-quality sites in SERPs and reward higher-quality sites. It had a major impact, with some sites seeing a dramatic drop in their rankings. Google Panda also introduced a set of criteria for judging a website’s quality, such as content depth, keyword relevance, and user experience.

2011 Google Freshness

Later that year, Google launched its Freshness update. This update focused on the timeliness of content: Google began to give more weight to recent content for queries where timeliness matters, such as news and recurring events.

For those queries, pages that had not been updated in a while were moved down in the SERPs, while more recent ones were given priority.

2011 Schema

In 2011, Google, Bing, and Yahoo launched Schema.org, a shared vocabulary of structured data markup (usable as microdata, among other formats) that helps search engines understand what the content on a page means. Schema.org lists all the available types of schema markup.

Schema markup is not a direct ranking factor. It can, however, increase your visibility in search engine results pages (SERPs) by making your pages eligible for rich results, often called rich snippets.
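As an illustration, here is a minimal Python sketch that builds schema.org markup for a blog article in JSON-LD, a format search engines accept alongside microdata. It uses the schema.org `Article` and `Person` types with the `headline`, `author`, and `datePublished` properties, but the function name and the example values are hypothetical; check the schema.org type definitions and your search engine’s structured data documentation for the properties a given rich result requires.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str) -> str:
    """Return a schema.org Article description wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2023-01-15"
    }
    # Escape "</" so text inside the JSON can never close the <script> tag early;
    # "\/" is a valid JSON escape for "/".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Hypothetical values for illustration only.
print(article_jsonld("The History of SEO", "Jane Doe", "2023-01-15"))
```

Pasted into a page’s HTML, the resulting script block describes the article to crawlers without changing what visitors see, and tools such as Google’s Rich Results Test can check whether it is eligible for rich results.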

2012 Google Penguin

The Google Penguin update targeted web spam, particularly sites using manipulative link-building tactics to achieve higher rankings. It affected a large number of websites, and many businesses that relied on questionable link-building strategies saw their rankings drop. It was the biggest update to Google’s algorithm since Panda and had a major impact on the SEO industry.

2013 Google Hummingbird

In 2013, Google released the Google Hummingbird algorithm, and it changed the way we search the internet forever. To many, Hummingbird represented the most significant alteration to Google’s fundamental algorithm since its introduction in 2001.

The algorithm was designed to improve the accuracy of search results by better understanding the natural language of queries.

“Things, not strings” was Google’s slogan for the Knowledge Graph, introduced in 2012, which Hummingbird built on. The Knowledge Graph lets you search for things, people, or places that Google knows about and immediately get relevant information, and Google used this data to improve its search results.

When someone conducts a search for one of the billions of entities or facts stored in the Knowledge Graph, several types of “knowledge panels,” “knowledge boxes,” and “knowledge carousels” may surface.

Hummingbird doesn’t just look at the keywords you type in but also at the context of the query. This helps it better understand the intent behind each query and provide more relevant results. It was built to better handle natural-language, conversational searches.

2014 Google Pigeon

Google changed the online experience for local businesses with its ‘Pigeon’ update, which made it easier than ever to find relevant, nearby search results. For those optimizing for local SEO, Pigeon offered increased visibility when users searched nearby, helping small business owners compete with larger rivals.

2015 Google Mobile Update AKA “Mobilegeddon”

In April 2015, Google released another major update, officially the mobile-friendly update but dubbed “Mobilegeddon” by the SEO community. It boosted websites optimized for mobile devices in mobile search results, which meant that sites that weren’t mobile-friendly became harder to find on phones and, thus, received fewer visitors.

Faster, Better, Contextual search results: Late 2010s – 2020

Google has made tremendous strides over the past few years to provide faster, more accurate, and more contextual search results to its users.

For starters, Google has implemented a number of speed improvements for its search engine. Pages now load faster than ever before, and Google’s algorithms have become much more efficient in delivering relevant results.

Google has also made significant advancements in artificial intelligence (AI). From voice recognition technology through image recognition to machine learning algorithms, AI is being used more and more to improve the user experience on Google’s platforms.

2015 Google RankBrain

2015 was a big year for Google, and the introduction of RankBrain was certainly a major moment.

RankBrain is a machine learning system that Google uses to help sort search results. It helps Google interpret search queries, especially ones it has never seen before, and match them to more relevant results.

2016 Google Possum

The Possum update was designed to provide more localized results for local searches, and it can have a major impact on local search engine optimization (SEO) efforts.

At its core, Possum filters out duplicate or closely overlapping listings and helps local businesses compete better with national or international businesses. It takes into account the physical location of the searcher and the address of the business in question when presenting results.

2017 Google Fred

The Fred update was designed to further penalize low-value websites that carried excessive advertising and little quality content.

If your website was using unethical search engine optimization strategies, you most likely noticed a drop in traffic after Google rolled out Fred.

2018 Google Medic

Nicknamed “Medic” by the SEO community because it hit health and medical sites especially hard, this broad core update appears to favor websites with medical authority, such as the Cleveland Clinic and the Mayo Clinic, over those without it.

Anyone who has asked “Dr. Google” a medical question knows how crucial it is to have reliable medical sources in the search results.

2019 BERT

Not every change is noticeable, but each aims to improve searchers’ results. For example, the 2019 BERT update built on the natural-language work of the 2013 Hummingbird update.

BERT (Bidirectional Encoder Representations from Transformers) helps Google understand the context of each word in a query, which matters most for longer, conversational, long-tail searches. As a result, content that matches search intent and long-tail keywords gained in importance.

2019 Rich Snippet Review

Featured Snippets are a special type of search result that Google displays at the top of its search engine results page. These snippets are usually pulled from a website’s content and feature a summary of the answer to a user’s query, as well as a link to the original website.

Everyone wants to appear in them, but few know how to make it happen.

If you do manage to land a Featured Snippet, you’ve hit the jackpot. Many queries can trigger a Featured Snippet above the regular results, so your content can get great exposure.

Getting there isn’t easy and requires a lot of trial and error, but if you succeed, you can reap the rewards quickly.

2019 Google’s Core Quality Updates

Core updates are broad, periodic changes to Google’s core algorithm, the set of ranking systems that determines which websites appear for a given search query. They are released to keep search results up to date and relevant.

Google’s core updates are designed to improve the relevancy of search results by making sure that the most relevant content shows up first.

Google wants to ensure that users are getting the best possible results for their queries, so having quality content is essential for a successful website. This includes content that is well-structured, properly formatted, and contains accurate information.

Better user experience, context, and value: 2020 till now

2021 Page Experience and Core Web Vitals (CWV)

Google is striving to create a more enjoyable web experience for all users, regardless of the browser or device they use.

Page experience looks at how users perceive their time on your website beyond the information it provides. Google measures it using five key elements: mobile-friendliness, safe browsing, HTTPS security, the absence of intrusive interstitials, and Core Web Vitals.

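Core Web Vitals are Google’s way of measuring a website’s user experience. When introduced, they evaluated how quickly a page loads its main content (Largest Contentful Paint, LCP), how quickly it responds to a user’s first interaction (First Input Delay, FID), and how stable its layout stays while loading (Cumulative Layout Shift, CLS). In March 2024, Google replaced FID with Interaction to Next Paint (INP).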
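To see how the thresholds work in practice, here is a minimal Python sketch that rates measurements against the thresholds Google published for the original three Core Web Vitals. LCP is good at 2.5 seconds or less and poor above 4 seconds. FID is good at 100 ms or less and poor above 300 ms. CLS is good at 0.1 or less and poor above 0.25. Google assesses the 75th percentile of real-user page loads, so the inputs here are assumed to already be 75th-percentile values. The function names are hypothetical, and INP (good at 200 ms or less, poor above 500 ms) could be swapped in for FID.

```python
from enum import Enum


class Rating(Enum):
    GOOD = "good"
    NEEDS_IMPROVEMENT = "needs improvement"
    POOR = "poor"


# (upper bound for "good", upper bound for "needs improvement") per metric,
# based on Google's published thresholds for the original Core Web Vitals.
THRESHOLDS = {
    "LCP": (2500.0, 4000.0),  # Largest Contentful Paint, milliseconds
    "FID": (100.0, 300.0),    # First Input Delay, milliseconds
    "CLS": (0.1, 0.25),       # Cumulative Layout Shift, unitless score
}


def rate(metric: str, value: float) -> Rating:
    """Rate one metric value against its thresholds."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return Rating.GOOD
    if value <= needs_improvement:
        return Rating.NEEDS_IMPROVEMENT
    return Rating.POOR


def passes_core_web_vitals(p75: dict[str, float]) -> bool:
    """Return True only if every metric in THRESHOLDS rates GOOD.

    `p75` holds 75th-percentile values; a missing metric raises KeyError.
    """
    return all(rate(metric, p75[metric]) is Rating.GOOD for metric in THRESHOLDS)


# Hypothetical 75th-percentile measurements for one page.
page = {"LCP": 2100.0, "FID": 80.0, "CLS": 0.18}
for metric in THRESHOLDS:
    print(metric, rate(metric, page[metric]).value)
print("passes:", passes_core_web_vitals(page))
```

Here the page loads and responds quickly, but its layout shift score of 0.18 falls in the “needs improvement” band, so it would not pass the assessment as a whole.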

2021 Google Multitask Unified Model (MUM)

Google is further advancing its search capabilities with the Multitask Unified Model (MUM), which it describes as 1,000 times more powerful than BERT. MUM is multimodal and multilingual: it can understand information across text and images and was trained across 75 languages, allowing Google to answer complex queries and deliver better-contextualized results. Rather than relying on separate models for each job, MUM is a single model trained to handle many tasks at once.

2022 Google Helpful Content

In August 2022, Google introduced the Helpful Content Update to further improve the experience of its users. It rewards original, useful, and user-friendly material in search results. This site-wide signal helps ranking systems identify content that delivers what visitors expect and need, giving more visibility to those who create engaging, informative, and valuable resources for their audience. It also demotes content that falls short of visitor expectations, helping people find quality information.

Key Takeaways

Search engines have come a long way since the early days of the internet. They continue to evolve and change systematically. As Google makes updates to its algorithm, it’s important for us to stay on top of these changes and adapt our SEO strategies accordingly. You might be wondering, where will the future of search take us? Only time will tell. But one thing is for sure: the world of SEO is always changing and evolving. And we need to be prepared for whatever comes next.